Data-tier application in SQL Server defines the schema and objects that are required to support an application.It is really very simple.

There are two ways you can implement a DAC:

Here is how you extract using the SQL Server Management Studio in SQL Server 2012.

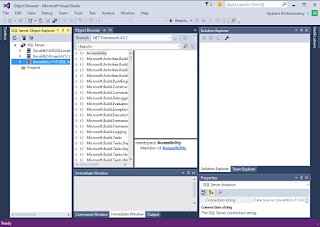

Connect to SQL Server on your computer. Here is the Object Explorer of a named instance of SQL Server 2012.

Dac_01

We now create a DAC using the Northwind database.

Right click Northwind to open the menu and the sub-menu as shown.

Dac_02

Click on Extract Data-Tier Application... to display the Introduction screen of the Wizard.

Dac_03

The above screen pretty well describes the actions we take. It has the three steps:

Dac_04

It appears that I have already created a file with that name and I will extract another with a different name NrthWnd.

Dac_05

Click Next. Displays the validation and summary page of the wizard.

Dac_07

Click Next. after a bit of creating and saving animation the process either succeeds or fails.

Dac_08

Click finish (after you get to see the Success of the operationb) and the DAC page is saved to the location indicated.

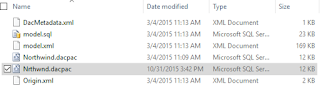

Dac_09

DAC files can be unpacked with programs shown.

Dac_10

There are two ways you can implement a DAC:

- Using Microsoft Visual Studio 2010 with a SQL Server Data-tier application project type

- Using the extraction utility in SQL Server's Extract Data-tier wizard.

Here is how you extract using the SQL Server Management Studio in SQL Server 2012.

Connect to SQL Server on your computer. Here is the Object Explorer of a named instance of SQL Server 2012.

Dac_01

We now create a DAC using the Northwind database.

Right click Northwind to open the menu and the sub-menu as shown.

Dac_02

Click on Extract Data-Tier Application... to display the Introduction screen of the Wizard.

Dac_03

The above screen pretty well describes the actions we take. It has the three steps:

- Set the DAC properties

- Review object dependency and validation results

- Build the DAC Package

Dac_04

It appears that I have already created a file with that name and I will extract another with a different name NrthWnd.

Dac_05

Click Next. Displays the validation and summary page of the wizard.

Dac_07

Click Next. after a bit of creating and saving animation the process either succeeds or fails.

Dac_08

Click finish (after you get to see the Success of the operationb) and the DAC page is saved to the location indicated.

Dac_09

DAC files can be unpacked with programs shown.

Dac_10